Introduction

This book is a collection of all my notes from my degree (computer systems engineering).

It exists for a few purposes:

- To consolidate knowledge

- To aid revision

- To act as a reference during exams

Contributing

If you wish to contribute to this, either to make any additions or just to fix any mistakes I've made, feel free.

The sources are all available on my Github.

CS118

This section is mainly just a reference of some of the more detailed bits of the module. It assumes a pretty strong prior knowledge of object oriented programming so doesn't aim to be comprehensive, it just specifies some details to remember for the exam.

The version of Java on DCS systems at the time of writing is 11. This is also the version these notes refer to.

Useful Resources

- https://en.wikipedia.org/wiki/Single-precision_floating-point_format

- The Oracle documentation for specifics on how Java implements stuff

IEEE 754

IEEE 754 is a standardised way of storing floating point numbers with three components

- A sign bit

- A biased exponent

- A normalised mantissa

| Type | Sign | Exponent | Mantissa | Bias |

|---|---|---|---|---|

| Single Precision (32 bit) | 1 (bit 31) | 8 (bit 30 - 23) | 23 (bit 22- 0) | 127 |

| Double Precision (64 bit) | 1 (bit 63) | 11 (bit 62 - 52) | 52 (51 - 0) | 1023 |

The examples below all refer to 32 bit numbers, but the principles apply to 64 bit.

- The exponent is an 8 bit unsigned number in biased form

- To get the true exponent, subtract 127 from the binary value

- The mantissa is a binary fraction, with the first bit representing , second bit , etc.

- The mantissa has an implicit , so 1 must always be added to the mantissa

Formula

Decimal to Float

The number is converted to a binary fractional format, then adjusted to fit into the form we need. Take 12.375 for example:

- Integer part

- Fraction part

Combining the two parts yields . However, the standard requires that the mantissa have an implicit 1, so it must be shifted to the right until the number is normalised (ie has only 1 as an integer part). This yields . As this has been shifted, it is actually . The three is therefore the exponent, but this has to be normalised (+127) to yield 130 . The number is positive (sign bit zero) so this yields:

| Sign | Biased Exponent | Normalised Mantissa |

|---|---|---|

| 0 | 1000 0010 | 100011 |

Float to Decimal

Starting with the value 0x41C80000 = 01000001110010000000000000000000:

| Sign | Biased Exponent | Normalised Mantissa |

|---|---|---|

| 0 | 1000 0011 | 1001 |

- The exponent is 131, biasing (-127) gives 4

- The mantissa is 0.5625, adding 1 (normalising) gives 1.5625

- gives 25

Special Values

- Zero

- When both exponent and mantissa are zero, the number is zero

- Can have both positive and negative zero

- Infinity

- Exponent is all 1s, mantissa is zero

- Can be either positive or negative

- Denormalised

- If the exponent is all zeros but the mantissa is non-zero, then the value is a denormalised number

- The mantissa does not have an assumed leading one

- NaN (Not a Number)

- Exponent is all 1s, mantissa is non-zero

- Represents error values

| Exponent | Mantissa | Value |

|---|---|---|

| 0 | 0 | |

| 255 | 0 | |

| 0 | not 0 | denormalised |

| 255 | not 0 | NaN |

OOP Principles

Constructors

All Java classes have a constructor, which is the method called upon object instantiation.

- An object can have multiple overloaded constructors

- A constructor can have any access modifier

- Constructors can call other constructors through the

this()method. - If no constructor is specified, a default constructor is generated which takes no arguments and does nothing.

- The first call in any constructor is to the superclass constructor.

- This can be elided, and the default constructor is called

- If there is no default constructor, a constructor must be called explicitly

- Can call explicitly with

super()

- This can be elided, and the default constructor is called

Access Modifiers

Access modifiers apply to methods and member variables.

private: only the members of the class can seepublic: anyone can seeprotected: only class and subclasses can see- Default: package-private, only members of the same package can see

Inheritance

- To avoid the diamond/multiple inheritance problem, Java only allows for single inheritance

- This is done using the

extendskeyword in the class definition - Inherits all public and protected methods and members

- Can, however, implement multiple interfaces

Example:

public class Car extends Vehicle implements Drivable, Crashable{

// insert class body here

}

The Car class extends the Vehicle base class (can be abstract or concrete) and implements the behaviours defined by the interfaces Drivable and Crashable.

static

The static keyword defines a method, a field, or a block of code that belongs to the class instead of the object.

- Static fields share a mutable state accross all instances of the class

- Static methods are called from the class instead of from the object

- Static blocks are executed once, the first time the class is loaded into memory

Polymorphism

Polymorphism: of many forms. A broad term describing a few things in java.

Dynamic Polymorphism

An object is defined as polymorphic if it passes more than one instanceof checks. An object can be referred to as the type of any one of it's superclasses. Say for example there is a Tiger class, which subclasses Cat, which subclasses Animal, giving an inheritance chain of Animal <- Cat <- Tiger, then the following is valid:

Animal a = new Tiger();

Cat c = new Tiger();

Tiger t = new Tiger();

When referencing an object through one of it's superclass types, you can only call objects that the reference type implements. For example, if there was two methods, Cat::meow and Tiger::roar, then:

c.meow() //valid

t.meow() //valid

a.meow() //not valid - animal has no method meow

t.roar() //valid

c.roar() // not valid - cat has no method roar

Even though all these variables are of the same runtime type, they are being called from a reference of another type.

When calling a method of an object, the actual method run is the one that is furthest down the inheritance chain. This is dynamic/runtime dispatch.

public class Animal{

public speak(){return "...";}

}

public class Dog extends Animal{

public speak(){return "woof";}

}

public class Cat extends Animal{

public speak(){return "meow";}

}

Animal a = new Animal();

Animal d = new Dog();

Animal c = new Cat();

a.speak() // "..."

d.speak() // "woof"

c.speak() // "meow"

Even though the reference was of type Animal, the actual method called was the overridden subclass method.

Static Polymorphism (Method Overloading)

Note: different to overridding

- Multiple methods with the same name can be written, as long as they have different parameter lists

- The method that is called depends upon the number of and type of the arguments passed

Example:

public class Addition{

private int add(int x, int y){return x+y;}

private float add(float x, float y){return x+y;}

public static void main(String[] args){

add(1,2); //calls first method

add(3.14,2.72); //calls second method

add(15,1.5); //calls second method

}

}

Abstraction

Abstraction is the process of removing irrelevant details from the user, while exposing the relevant details. For example, you don't need to know how a function works, it's inner workings are abstracted away, leaving only the function's interface and details of what it does.

In the example below, the workings of the sine function are abstracted away, but we still know what it does and how to use it.

float sin(float x){

//dont care really

}

sin(90); // 1.0

Encapsulation

Encapsulation is wrapping the data and the code that acts on it into a single unit. The process is also known as data hiding, because the data is often hidden (declared private) behind the methods that retrieve them (getters/setters).

Reference Variables

There is no such thing as an object variable in Java. Only primitives (int,char,float...), and references. All objects are heap-allocated (new), and a reference to them stored. Methods are all pass by value: either the value of the primitive, or the value of the reference. Java is not pass by reference . Objects are never copied/cloned/duplicated implicitly.

If a reference type is required (ie Integer), but a primitive is given ((int) 1), then the primitive will be autoboxed into it's equivalent object type.

Abstract Classes and Interfaces

- Abstract classes are classes that contain one or more

abstractmethods.- A class must be declared

abstract - Abstract methods have no body, ie are unimplemented.

- The idea of them is to generalise behaviour, and leave it up to subclasses to implement

- Abstract classes cannot be instantiated directly, though can still have constructors for subclasses to call

- A class must be declared

- Interfaces are a special kind of class that contain only abstract methods (and fields declared

public static final)- Used to define behaviour

- Technically can contain methods, but they're default implementations

- This raises all sorts of issues so is best avoided

- Don't have to declare methods abstract, it's implicit

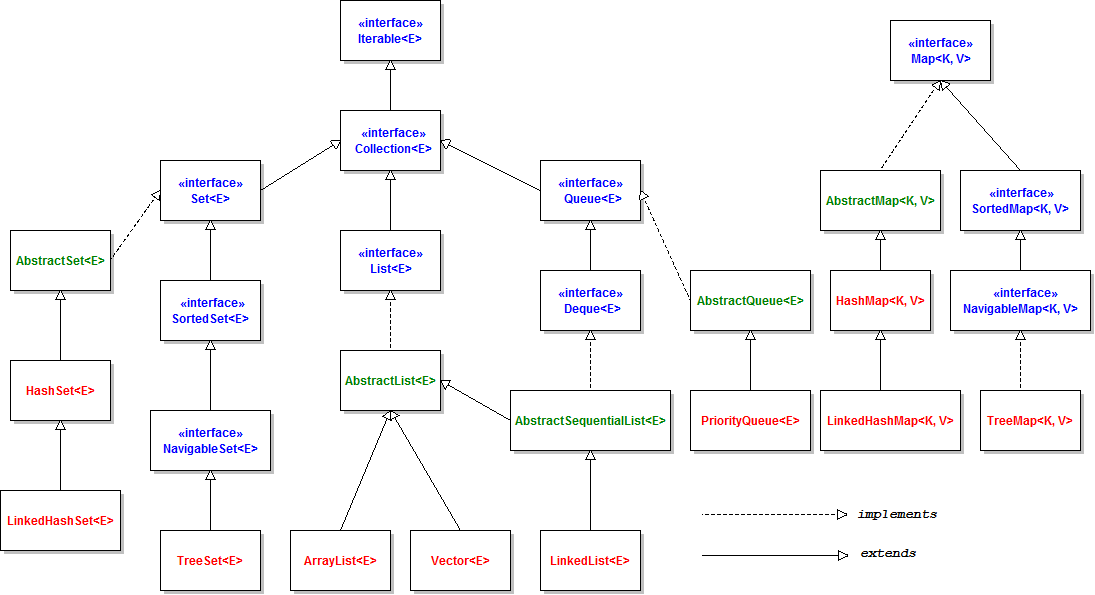

The diagram shows the inheritance hierarchy of the java collections framework, containing interfaces, abstract classes, and concrete classes.

Exceptions

Exceptions

Exceptions are events that occur within the normal flow of program execution that disrupt the normal flow of control.

Throwing Exceptions

Exceptions can occur when raised by other code we call, but an exception can also be raised manually using a throw statement. Any object that inherits, either directly or indirectly, from the Throwable class, can be raised as an exception.

//pop from a stack

public E pop(){

if(this.size == 0)

throw new EmptyStackException();

//pop the item

}

Exception Handling

- Exceptions can be caught using a

try-catchblock - If any code within the

tryblock raises an exception, thecatchblock will be executedcatchblocks must specify the type of exception to catch- Can have multiple catch blocks for different exceptions

- Only 1 catch block will be executed

- A

finallyblock can be included to add any code to execute after the try-catch, regardless of if an exception is raised or not. - The exception object can be queried through the variable

e

try{

//try to do something

} catch (ExceptionA e){

//if an exception of type ExceptionA is thrown, this is executed

} catch (ExceptionB e){

//if an exception of type ExceptionB is thrown, this is executed

} finally{

//this is always executed

}

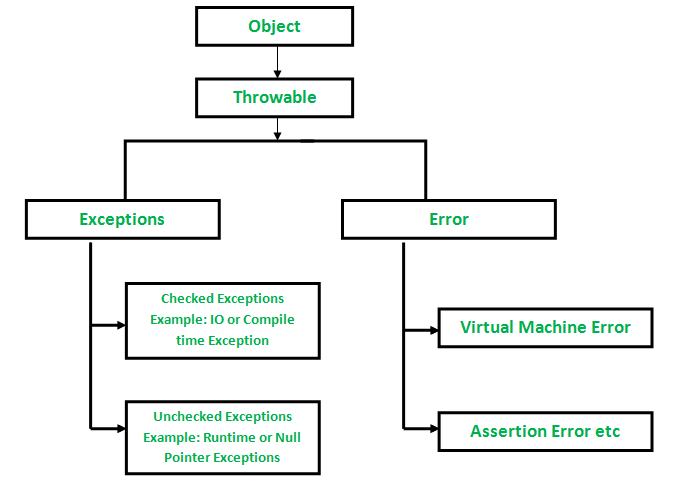

Exception Heirachy

- The

Throwableclass is the parent class of all errors and exceptions in Java - There are two subclasses of

ThrowableError, which defines hard errors within the JVM that aren't really recoverableException, which defines errors that may occur within the code- There are two kinds of exception, checked and unchecked

Checked and Unchecked Exceptions

- Checked exceptions must be either caught or re-thrown

IOExceptionis a good example

- When a method that may throw a checked exception is required, there are two options

- Wrap the possibly exception-raising code in a

try-catch - Use the

throwskeyword in the method definition to indicate that the method may throw a checked exception

- Wrap the possibly exception-raising code in a

public static void ReadFile() throws FileNotFoundException{

File f = new File("non-existant-file.txt")

FileInputStream stream = new FileInputStream(f);

}

// OR

public static void ReadFile(){

File f = new File("non-existant-file.txt")

try{

FileInputStream stream = new FileInputStream(f);

} catch (FileNotFoundException){

e.printStackTrace();

return;

}

}

- Unchecked Exceptions all subclass

RunTimeException- ie

NullPointerExceptionandArrayIndexOutOfBoundsException

- ie

- Can be thrown at any point and will cause program to exit if not caught

Custom Exceptions

- Custom exception classes can be created

- Should subclass

Throwable- Ideally the most specific subclass possible

- Subclassing

Exceptiongives a new checked exception - Subclassing

RunTimeExceptiongives a new unchecked exception

- All methods such as

printStackTraceandgetMessageinherited from superclass - Should provide at least one constructor that overrides a superclass constructor

public class IncorrectFileExtensionException

extends RuntimeException {

public IncorrectFileExtensionException(String errorMessage, Throwable err) {

super(errorMessage, err);

}

}

Generics

Generics allow for classes to be parametrised over some type or types, to provide additional compile time static type checking. A simple box class parametrised over some type E, for example:

public class Box<E>{

E item;

public Box(E item){

this.item = item;

}

public E get(){

return item;

}

public E set(E item){

this.item = item;

}

}

Generic Methods

Methods can be generic too, introducing their own type parameters. The parameters introduced in methods are local to that method, not the whole class. As an example, the static method below compares two Pair<K,V> classes to see if they are equal.

public static <K, V> boolean compare(Pair<K, V> p1, Pair<K, V> p2) {

return p1.getKey().equals(p2.getKey()) &&

p1.getValue().equals(p2.getValue());

}

Type erasure

Type information in generic classes and methods is erased at runtime, with the compiler replacing all instances of the type variable with Object. Object is also what appears in the compiled bytecode. This means that at runtime, any type casting of generic types is unchecked, and can cause runtime exceptions.

CS126

The book Data Structures and Algorithms in Java by Goodrich, Tamassia and Goldwasser is a good resource as it aligns closely with the material. It can be found online fairly easily.

Arrays & Linked Lists

Arrays

Arrays are the most common data structure and are very versatile

- A sequenced collection of variables of the same type (homogenous)

- Each cell in the array has an index

- Arrays are of fixed length and so have a max capacity

- Can store primitives, or references to objects

- When inserting an element into the array, all to the right must be shifted up by one

- The same applies in reverse for removal to prevent null/0 gaps being left

Sorting Arrays

- The sorting problem:

- Consider an array of unordered elements

- We want to put them in a defined order

- For example

[3, 6, 2, 7, 8, 10, 22, 9]needs to become[2, 3, 6, 7, 8, 9, 10, 22]

- One possible solution: insertion sort:

- Go over the entire array, inserting each element at it's proper location by shifting elements along

public static void insertionSort(int[] data){

int n = data.length;

for(int k = 1; k < n; k++){ //start with second element

int cur = data[k]; //insert data[k]

int j = k; //get correct index j for cur

while(j < 0 && data[j-1] > cur){ //data[j-1] must go after cur

data[j] = data[j-1]; // slide data[j-1] to the right

j--; //consider previous j for cur

}

data[j] = cur; //cur is in the right place

}

}

- Insertion sort sucks

- Has worst case quadratic complexity, as k comparisons are required for k iterations.

- When the list is in reverse order (worst case), comparisons are made

- Can do much better with alternative algorithms

Singly Linked Lists

- Linked lists is a concrete data structure consisting of a chain of nodes which point to each other

- Each node stores the element, and the location of the next node

- The data structure stores the head element and traverses the list by following the chain

- Operations on the head of the list (ie, prepending) are efficient, as the head node can be accessed via its pointer

- Operations on the tail require first traversing the entire list, so are slow

- Useful when data needs to always be accessed sequentially

- Generally, linked lists suck for literally every other reason

Doubly Linked Lists

- In a doubly linked list, each node stores a pointer to the node in front of and behind it

- This allows the list to be traversed in both directions, and for nodes to be easily inserted mid-sequence

- Sometimes, special header and trailer "sentinel" nodes are added to maintain a reference to the head an tail of the list

- Also removes edge cases when inserting/deleting nodes as there is always nodes before/after head and tail

Analysis of Algorithms

This topic is key to literally every other one, and also seems to make up 90% of the exam questions (despite there being only 1 lecture on it) so it's very important.

- Need some way to characterise how good a data structure or algorithm is

- Most algorithms take input and generate output

- The run time of an algorithm typically grows with input size

- Average case is often difficult to determine

- Focus on the worst case

- Runtime analysis and benchmarks can be used to determine the performance of an algorithm, but this is often not possible

- Results will also vary from machine to machine

- Theoretical analysis is preferred as it gives a more high-level analysis

- Characterises runtime as a function of input size

Pseudocode

- Pseudocode is a high level description of an algorithm

- Primitive perations are assumed to take unit time

- For example

- Evaluating an expression

- Assigning to a variable

- Indexing into an array

- Calling a method

Looking at an algorithm, can count the number of operations in each step to analyse its runtime

public static double arrayMax(double[] data){

int n = data.length; //2 ops

double max = data[0]; //2 ops

for (int j=1; j < n;j++) //2n ops

if(data[j] > max) //2n-2 ops

max = data[j]; //0 to 2n-2 ops

return max; //1 op

}

- In the best case, there are primitive operations

- In the worst case,

- The runtime is therefore

- is the time to execute a primitive operation

Functions

There are 7 important functions that appear often when analysing algorithms

- Constant -

- A fixed constant

- Could be any number but 1 is the most fundamental constant

- Sometimes denoted where

- Logarithmic -

- For some constant ,

- Logarithm is the inverse of the power function

- Usually, because we are computer scientists and everything is base 2

- Linear -

-

- is a fixed constant

-

- n-log-n -

- Commonly appears with sorting algorithms

- Quadratic -

- Commonly appears where there are nested loops

- Cubic -

- Less common, also appears where there are 3 nested loops

- Can be generalised to other polynomial functions

- Exponential -

-

- is some arbitrary base, is the exponent

-

The growth rate of these functions is not affected by changing the hardware/software environment. Growth rate is also not affected by lower-order terms.

- Insertion sort takes time

- Characterised as taking time

- Merge sort takes

- Characterised as

- The

arrayMaxexample from earlier took time- Characterised as

- A polynomial of degree , is of order

Big-O Notation

- Big-O notation is used to formalise the growth rate of functions, and hence describe the runtime of algorithms.

- Gives an upper bound on the growth rate of a function as

- The statement " is " means that the growth rate of is no more than the growth rate of

- If is a polynomial of degree , then is

- Drop lower order terms

- Drop constant factors

- Always use the smallest possible class of functions

- is , not

- Always use the simplest expression

- is , not

Formally, given functions and , we say that is if there is a positive constant and a positive integer constant , such that

where , and

Examples

is :

The function is not The inequality does not hold, since must be constant.

Big-O of :

Big-O of :

is

Asymptotic Analysis

- The asymptotic analysis of an algorithm determines the running time big-O notation

- To perform asymptotic analysis:

- Find the worst-case number of primitive operations in the function

- Express the function with big-O notation

- Since constant factors and lower-order terms are dropped, can disregard them when counting primitive operations

Example

The th prefix average of an array is the average of the first elements of . Two algorithms shown below are used to calculate the prefix average of an array.

Quadratic time

//returns an array where a[i] is the average of x[0]...x[i]

public static double[] prefixAverage(double[] x){

int n = x.length;

double[] a = new double[n];

for(int j = 0; j < n; j++){

double total = 0;

for(int i = 0; i <= j; i++)

total += x[i];

a[j] = total / (j+1);

}

return a;

}

The runtime of this function is . The sum of the first integers is , so this algorithm runs in quadratic time. This can easily be seen by the nested loops in the function too.

Linear time

//returns an array where a[i] is the average of x[0]...x[i]

public static double[] prefixAverage(double[] x){

int n = x.length;

double[] a = new double[n];

double total = 0;

for(int i = 0; i <= n; i++){

total += x[i];

a[i] = total / (i+1);

}

return a;

}

This algorithm uses a running average to compute the same array in linear time, by calculating a running sum.

Big-Omega and Big-Theta

Big-Omega is used to describe the best case runtime for an algorithm. Formally, is if there is a constant and an integer constant such that

Big-Theta describes the average case of the runtime. is if there are constants and , and an integer constant such that

The three notations compare as follows:

- Big-O

- is if is asymptotically less than or equal to

- Big-

- is if is asymptotically greater than or equal to

- Big-

- is if is asymptotically equal to

Recursive Algorithms

Recursion allows a problem to be broken down into sub-problems, defining a problem in terms of itself. Recursive methods work by calling themselves. As an example, take the factorial function:

In java, this can be written:

public static int factorial(int n){

if(n == 0) return 1;

return n * factorial(n-1);

}

Recursive algorithms have:

- A base case

- This is the case where the method doesn't call itself, and the stack begins to unwind

- Every possible chain of recursive calls must reach a base case

- If not the method will recurse infinitely and cause an error

- A recursive case

- Calls the current method again

- Should always eventually end up on a base case

Binary Search

Binary search is a recursively defined searching algorithm, which works by splitting an array in half at each step. Note that for binary search, the array must already be ordered.

Three cases:

- If the target equals

data[midpoint]then the target has been found- This is the base case

- If the target is less than

data[midpoint]then we binary search everything to the left of the midpoint - If the target is greater than

data[midpoint]then we binary search everything to the right of the midpoint

public static boolean binarySearch(int[] data, int target, int left, int right){

if (left > right)

return false;

int mid = (left + right) / 2;

if(target == data[mid])

return true;

else if (target < data[mid])

return binarySearch(data,target,low,mid-1);

else

return binarySearch(data,target,mid+1,high);

}

Binary search has , as the size of the data being processed halves at each recursive call. After the call, the size of the data is at most .

Linear Recursion

- The method only makes one recursive call

- There may be multiple possible recursive calls, but only one should ever be made (ie binary search)

- For example, a method used in computing powers by repeated squaring:

public static int pow(int x, int n){

if (n == 0) return 1;

if (n % 2 == 0){

y = pow(x,n/2);

return x * y * y;

}

y = pow(x,(n-1)/2);

return y * y;

}

Note how despite multiple cases, pow only ever calls itself once.

Binary Recursion

Binary recursive methods call themselves twice recursively. Fibonacci numbers are defined using binary recursion:

- = 0

public static int fib(int n){

if (n == 0) return 0;

if (n == 1) return 1;

return fib(n-1) + fib(n-2);

}

This method calls itself twice, which isn't very efficient. It can end up having to compute the same result many many times. A better alternative is shown below, which uses linear recursion, and is therefore much much more efficient.

public static Pair<Integer,Integer> linearFib(int n){

if(k = 1) return new Pair(n,0);

Pair result = linearFib(n-1);

return new Pair(result.snd+1, result.fst);

}

Multiple Recursion

Multiple recursive algorithms call themselves recursively more than twice. These are generally very inefficient and should be avoided.

Stacks & Queues

Abstract Data Types (ADTs)

- An ADT is an abstraction of a data structure

- Specifies the operations performed on the data

- Focus is on what the operation does, not how it does it

- Expressed in java with an interface

Stacks

- A stack is a last in, first out data structure (LIFO)

- Items can be pushed to or popped from the top

- Example uses include:

- Undo sequence in a text editor

- Chain of method calls in the JVM (method stack)

- As auxillary storage in multiple algorithms

The Stack ADT

The main operations are push() and pop(), but others are included for usefulness

public interface Stack<E>{

int size();

boolean isEmpty();

E peek(); //returns the top element without popping it

void push(E elem); //adds elem to the top of the stack

E pop(); //removes the top stack item and returns it

}

Example Implementation

The implementation below uses an array to implement the interface above. Only the important methods are included, the rest are omitted for brevity.

public class ArrayStack<E> implements Stack<E>{

private E[] elems;

private int top = -1;

public ArrayStack(int capacity){

elems = (E[]) new Object[capacity];

}

public E pop(){

if (isEmpty()) return null;

E t = elems[top];

top = top-1;

return t;

}

public E push(){

if (top == elems.length-1) throw new FullStackException; //cant push to full stack

top++;

return elems[top];

}

}

- Advantages

- Performant, uses an array so directly indexes each element

- space and each operation runs in time

- Disadvantages

- Limited by array max size

- Trying to push to full stack throws an exception

Queues

- Queues are a first in, first out (FIFO) data structure

- Insertions are to the rear and removals are from the front

- In contrast to stacks which are LIFO

- Example uses:

- Waiting list

- Control access to shared resources (printer queue)

- Round Robin Scheduling

- A CPU has limited resources for running processes simultaneously

- Allows for sharing of resources

- Programs wait in the queue to take turns to execute

- When done, move to the back of the queue again

The Queue ADT

public interface Queue<E>{

int size();

boolean isEmpty();

E peek();

void enqueue(E elem); //add to rear of queue

E dequeue(); // pop from front of queue

}

Lists

The list ADT provides general support for adding and removing elements at arbitrary positions

The List ADT

public interface List<E>{

int size();

boolean isEmpty();

E get(int i); //get the item from the index i

E set(int i, E e); //set the index i to the element e, returning what used to be at that index

E add(int i, E e); //insert an element in the list at index i

void remove(int i); //remove the element from index i

}

Array Based Implementation (ArrayList)

Array lists are growable implementations of the List ADT that use arrays as the backing data structure. The idea is that as more elements are added, the array resizes itself to be bigger, as needed. Using an array makes implementing get() and set() easy, as they can both just be thin wrappers around array[] syntax.

- When inserting, room must be made for new elements by shifting other elements forward

- Worst case (inserting to the head) runtime

- When removing, need to shift elements backward to fill the hole

- Same worst case as insertion,

When the array is full, we need to replace it with a larger one and copy over all the elements. When growing the array list, there are two possible strategies:

- Incremental

- Increase the size by a constant

- Doubling

- Double the size each time

These two can be compared by analysing the amortised runtime of the push operation, ie the average time required for a pushes taking a total time .

With incremental growth, over push operations, the array is replaced times, where is the constant amount the array size is increased by. The total time of push operations is proportional to:

Since is a constant, is , meaning the amortised time of a push operation is .

With doubling growth, the array is replaced times. The total time of pushes is proportional to:

Thus, is , meaning the amortised time is

Positional Lists

- Positional lists are a general abstraction of a sequence of elements without indices

- A position acts as a token or marker within the broader positional list

- A position

pis unaffected by changes elsewhere in a list- It only becomes invalid if explicitly deleted

- A position instance is an object (ie there is some

Positionclass)- ie

p.getElement()returns the element stored at positionp

- ie

- A very natural way to implement a positional list is with a doubly linked list, where each node represents a position.

- Where a pointer to a node exists, access to the previous and next node is fast ()

ADT

public interface PositionalList<E>{

int size();

boolean isEmpty();

Position<E> first(); //return postition of first element

Position<E> last(); //return position of last element

Position<E> before(Position<E> p); //return position of element before position p

Position<E> after(Posittion<E> p); //return position of element after position p

void addFirst(E e); //add a new element to the front of the list

void addLast(E e); // add a new element to the back of the list

void addBefore(Position<E> p, E e); // add a new element just before position p

void addAfter(Position<E> p, E e); // add a new element just after position p

void set(Position<E> p, E e); // replaces the element at position p with element e

E remove(p); //removes and returns the element at position p, invalidating the position

}

Iterators

Iterators are a software design pattern that abstract the process of scanning through a sequence one element at a time. A collection is Iterable if it has an iterator() method, which returns an instance of a class which implements the Iterator interface. Each call to iterator() returns a new object. The iterable interface is shown below.

public interface Iterator<E>{

boolean hasNext(); //returns true if there is at least one additional element in the sequence

E next(); //returns the next element in the sequence, advances the iterator by 1 position.

}

// example usage

public static void iteratorOver(Iterable<E> collection){

Iterator<E> iter = collection.iterator();

while(iter.hasNext()){

E var = iter.next();

System.out.println(var);

}

}

Maps

- Maps are a searchable collection of key-value entries

- Lookup the value using the key

- Keys are unique

The Map ADT

public interface Map<K,V>{

int size();

boolean isEmpty();

V get(K key); //return the value associated with key in the map, or null if it doesn't exist

void put(K key, V value); //associate the value with the key in the map

void remove(K key); //remove the key and it's value from the map

Collection<E> entrySet(); //return an iterable collection of the values in the map

Collection<E> keySet(); //return an iterable collection of the keys in the map

Iterator<E> values(); //return an iterator over the map's values

}

List-Based Map

A basic map can be implemented using an unsorted list.

get(k)- Does a simple linear search of the list looking for the key,value pair

- Returns null if search reaches end of list and is unsuccessful

put(k,v)- Does linear search of the list to see if key already exists

- If so, replace value

- If not, just add new entry to end

- Does linear search of the list to see if key already exists

remove(k)- Does a linear search of the list to find the entry and removes it

- All operations take time so this is not very efficient

Hash Tables

- Recall the map ADT

- Intuitively, a map

Msupports the abstraction of using keys as indices such asM[k] - A map with n keys that are known to be integers in a fixed range is just an array

- A hash function can map general keys (ie not integers) to corresponding indices in a table/array

Hash Functions

A hash function maps keys of a given type to integers in a fixed interval .

-

A very simple hash function is the mod function:

- Works for integer keys

- The integer is the hash value of the key

-

The goal of a hash function is to store an entry at index

-

Function usually has two components:

- Hash code

- keys -> integers

- Compression function

- integers -> integers in range

- Hash code applied first, then compression - Some example hash functions:

- Hash code

-

Memory address

- Use the memory address of the object as it's hash code

-

Integer cast

- Interpret the bits of the key as an integer

- Only suitable with 64 bits

-

Component sum

- Partition they key into bitwise components of fixed length and sum the components

-

Polynomial accumulation

- Partition the bits of the key into a sequence of components of fixed length , , ... ,

- Evaluate the polynomial for some fixed value

- Especially suitable for strings

- Polynomial can be evaluated in time as

Some example compression functions:

- Division

- The size is usually chosen to be a prime to increase performance

- Multiply, Add, and Divide (MAD)

- and are nonnegative integers such that

Collision Handling

Collisions occur when different keys hash to the same cell. There are several strategies for resolving collisions.

Separate Chaining

With separate chaining, each cell in the map points to another map containing all the entries for that cell.

Linear Probing

- Open addressing

- The colliding item is placed in a different cell of the table

- Linear probing handles collisions by placing the colliding item at the next available table cell

- Each table cell inspected is referred to as a "probe"

- Colliding items can lump together, causing future collisions to cause a longer sequence of probes

Consider a hash table that uses linear probing.

get(k)- Start at cell

- Prove consecutive locations until either

- Key is found

- Empty cell is found

- All cells have been unsuccessfully probed

- To handle insertions and deletions, need to introduce a special marker object

defunctwhich replaces deleted elements remove(k)- Search for an entry with key

k - If an entry

(k, v)is found, replace it withdefunctand returnv - Else, return

null

- Search for an entry with key

Double Hashing

- Double hashing uses two hash functions

h()andf() - If cell

h(k)already occupied, tries sequentially the cell for f(k)cannot return zero- Table size must be a prime to allow probing of all cells

- Common choice of second hash func is where q is a prime

- if then we have linear probing

Performance

- In the worst case, operations on hash tables take time when the table is full and all keys collide into a single cell

- The load factor affects the performance of a hash table

- = number of entries

- = number of cells

- When is large, collision is likely

- Assuming hash values are true random numbers, the "expected number" of probes for an insertion with open addressing is

- However, in practice, hashing is very fast and operations have performance, provided is not close to 1

Sets

A set is an unordered collection of unique elements, typically with support for efficient membership tests

- Like keys of a map, but with no associated value

Set ADT

Sets also provide for traditional mathematical set operations: Union, Intersection, and Subtraction/Difference.

public interface Set<E>{

void add(E e); //add element e to set if not already present

void remove(E e); //remove element e from set if present

boolean contains(E e); //test if element e is in set

Iterator<E> iterator(); //returns an iterator over the elements

//updates the set to include all elements of set T

// union

void addAll(Set<E> T);

//updates the set to include only the elements of the set that are also in T

//intersection

void retainAll(Set<E> T);

//updates the set to remove any elements that are also in T

//difference

void removeAll(Set<E> T);

}

Generic Merging

Generic merge is a generalised merge of two sorted lists A and B to implement set operations. Uses a template method merge and 3 auxillary methods that describe what happens in each case:

aIsLess- Called when the element of

Ais less than the element ofB

- Called when the element of

bIsLess- Called when the element of

Bis less than the element ofA

- Called when the element of

bothEqual- Called when the element of

Ais equal to the element ofB

- Called when the element of

public static Set<E> merge(Set<E> A, Set<E> B){

Set<E> S = new Set<>();

while (!A.isEmpty() && !B.isEmpty()){

a = A.firstElement();

b = B.firstElement();

if(a < b){

aIsLess(a,S);

A.remove(a);

}

else if (b < a){

bIsLess(b,S);

B.remove(b);

}

else{ //b == a

bothEqual(a,b,S);

A.remove(a);

B.remove(b);

}

while(!A.isEmpty()){

aIsLess(a,S);

A.remove(a);

}

while(!B.isEmpty()){

bIsLess(b,S);

B.remove(b);

}

}

return S;

}

- Any set operation can be implemented using generic merge

- Union

aIsLessadds a into SbIsLessadds b into SbothEqualadds a (or b) into S

- Intersection

aIsLessandbIsLessdo nothingbothEqualadds a (or b) into S

- Difference

aIsLessadds a into SbIsLessandbothEqualdo nothing

- Runs in linear time, , provided the auxillary methods are

Trees

- A tree is an abstract model of a heirarchical structure

- A tree consists of nodes with a parent-child relationship

- A parent has one or more children

- Each child has only one parent

- The root is the top node in the tree, the only node without a parent

- An internal node has at least one child

- An external node (or leaf) is a mode with no children

- Nodes have ancestors (ie, the parent node of a parent)

- The depth of a node is its number of ancestors

- The height of a tree is its maximum depth

Tree ADT

Tree ADTs are defined using a similar concept to positional lists, as they don't have a natural ordering/indexing in the same way arrays do.

public interface Tree<E>{

int size();

boolean isEmpty();

Node<E> root(); //returns root node

Node<E> parent(Node<E> n); //returns parent of Node n

Iterable<Node<E>> children(Node<E> n); //collection of all the children of Node n

int numChildren(Node<E> n);

Iterator<E> iterator(); //an iterator over the trees elements

Iterator<Node<E>> nodes(); //collection of all the nodes

boolean isInternal(Node<E> n); //does the node have at least one child

boolean isExternal(Node<E> n); //does the node have no children

boolean isRoot(Node<E> n); //is the node the root

}

Tree Traversal

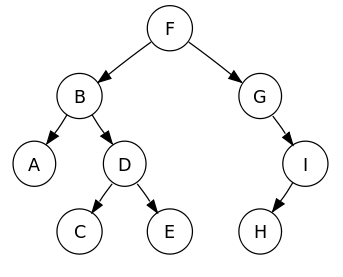

Trees can be traversed in 3 different orders. As trees are recursive data structures, all 3 traversals are defined recursively. The tree below is used as an example in all 3 cases.

Pre-order

- Visit the root

- Pre order traverse the left subtree

- Pre order traverse the right subtree

Pre-order traversal of the tree gives: F B A D C E G I H

In-order

- In order traverse the left subtree

- Visit the root

- In order traverse the right subtree

In-order traversal of the tree gives: A B C D E F G H I

Post-order

- Post order traverse the left subtree

- Post order traverse the right subtree

- Visit the root

Post-order traversal of the tree gives: A C E D B H I G F

Binary Trees

A binary tree is a special case of a tree:

- Each node has at most two children (either 0, 1 or 2)

- The children of the node are an ordered pair (the left node is less than the right node)

A binary tree will always fulfil the following properties:

Where:

- is the number of nodes in the tree

- is the number of external nodes

- is the number of internal nodes

- is the height/max depth of the tree

Binary Tree ADT

The binary tree ADT is an extension of the normal tree ADT with extra accessor methods.

public interface BinaryTree<E> extends Tree<E>{

Node<E> left(Node<E> n); //returns the left child of n

Node<E> right(Node<E> n); //returns the right child of n

Node<E> sibling(Node<E> n); //returns the sibling of n

}

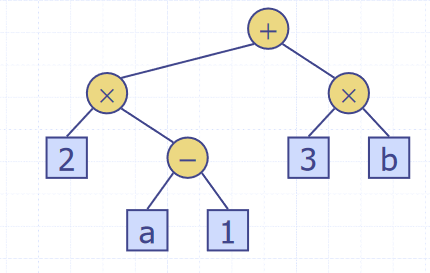

Arithmetic Expression Trees

Binary trees can be used to represent arithmetic expressions, with internal nodes as operators and external nodes as operands. The tree below shows the expression . Traversing the tree in-order will can be used to print the expression infix, and post-order evaluating each node with it's children as the operand will return the value of the expression.

Implementations

- Binary trees can be represented in a linked structure, similar to a linked list

- Node objects are positions in a tree, the same as positions in a positional list

- Each node is represented by an object that stores

- The element

- A pointer to the parent node

- A pointer to the left child node

- A pointer to the right child node

- Alternatively, the tree can be stored in an array

A A[root]is 0- If p is the left child of q,

A[p] = 2 * A[q] + 1 - If p is the right child of q,

A[p] = 2 * A[q] + 2 - In the worst, case the array will have size

Binary Search Trees

- Binary trees can be used to implement a sorted map

- Items are stored in order by their keys

- For a node with key , every key in the left subtree is less than , and every node in the right subtree is greater than

- This allows for support of nearest-neighbour queries, so can fetch the key above or below another key

- Binary search can perform nearest neighbour queries on an ordered map to find a key in time

- A search table is an ordered map implemented using a sorted sequence

- Searches take

- Insertion and removal take time

- Only effective for maps of small size

Methods

Binary trees are recursively defined, so all the methods operating on them are easily defined recursively also.

- Search

- To search for a key

- Compare it with the key at

- If , the value has been found

- If , search the right subtree

- If , search the left subtree

- Insertion

- Search for the key being inserted

- Insert at the leaf reached by the search

- Deletion

- Find the internal node that is follows the key being inserted in an in order traversal (the in order successor)

- Copy key into the in order successor node

- Remove the node copied out of

Performance

- Consider a binary search tree with items and height

- The space used is

- The methods get, put, remove take time

- The height h is in the best case, when the tree is perfectly balanced

- In the worst case, when the tree is basically just a linked list, this decays to

AVL Trees

- AVL trees are balanced binary trees

- For every internal node of the tree, the heights of the subtrees of can differ by at most 1

- The height of an AVL tree storing keys is

- Balance is maintained by rotating nodes every time a new one is inserted/removed

Performance

- The runtime of a single rotation is

- The tree is assured to always have , so the runtime of all methods is

- This makes AVL trees an efficient implementation of binary trees, as their performance does not decay as the tree becomes unbalanced

Priority Queues

A priority queue is an implementation of a queue where each item stored has a priority. The items with the highest priority are moved to the front of the queue to leave first. A priority queue takes a key along with a value, where the key is used as the priority of the item.

Priority Queue ADT

public interface PriorityQueue<K,V>{

int size();

boolean isEmpty();

void insert(K key, V value); //inserts a value into the queue with key as its priority

V removeMin(); //removes the entry with the lowest key (at the front of the queue)

V min(); //returns but not removes the smallest key entry (peek)

}

Entry Objects

- To store a key-value pair, a tuple/pair-like object is needed

- An

Entry<K,V>object is used to store each queue itemKeyis what is used to defined the priority of the item in the queueValueis the queue item

- This pattern is similar to what is used in maps

public class Entry<K,V>{

private K key;

private V value;

public Entry(K key, V value){

this.key = key;

this.value = value;

}

public K getKey(){

return key;

}

public V getValue(){

return value;

}

}

Total Order Relations

- Keys may be arbitrary values, so they must have some order defined on them

- Two entries may also have the same key

- A total order relation is a mathematical concept which formalises ordering on a set of objects where any 2 are comparable.

- A total ordering satisfies the following properties

- or

- Comparability property

- If , then

- Transitive property

- If and , then

- Antisymmetric property

-

- Reflexive property

- or

Comparators

- A comparator encapsulates the action of comparing two objects with a total order declared on them

- A priority queue uses a comparator object given to it to compare two keys to decide their priority

public class Comparator<E>{

public int compare(E a, E b){

if(a < b)

return -1;

if(a > b)

return 1;

return 0;

}

}

Implementations

Unsorted List-Based Implementation

A simple implementation of a priority queue can use an unsorted list

insert()just appends theEntry(key,value)to the list- time

removeMin()andmin()linear search the list to find the smallest key (one with highest priority) to return- Linear search takes time

Sorted List-Based Implementation

To improve the speed of removing items, a sorted list can instead be used. These two implementations have a tradeoff between which operations are faster, so the best one for the application is usually chosen.

insert()finds the correct place to insert theEntry(key,value)in the list to maintain the ordering- Has to find place to insert, takes time

- As the list is maintained in order, the entry with the lowest key is always at the front, meaning

removeMin()andmin()just pop from the front- Takes time

Sorting Using a Priority Queue

The idea of using a priority queue for sorting is that all the elements are inserted into the queue, then removed one at a time such that they are in order

- Selection sort uses an unsorted queue

- Inserting items in each time takes time

- Removing the elements in order

- Overall time

- Insertion sort uses a sorted queue

- Runtimes are the opposite to unsorted

- Adding elements takes time

- Removing elements in each time takes time

- Overall runtime of again

Heaps

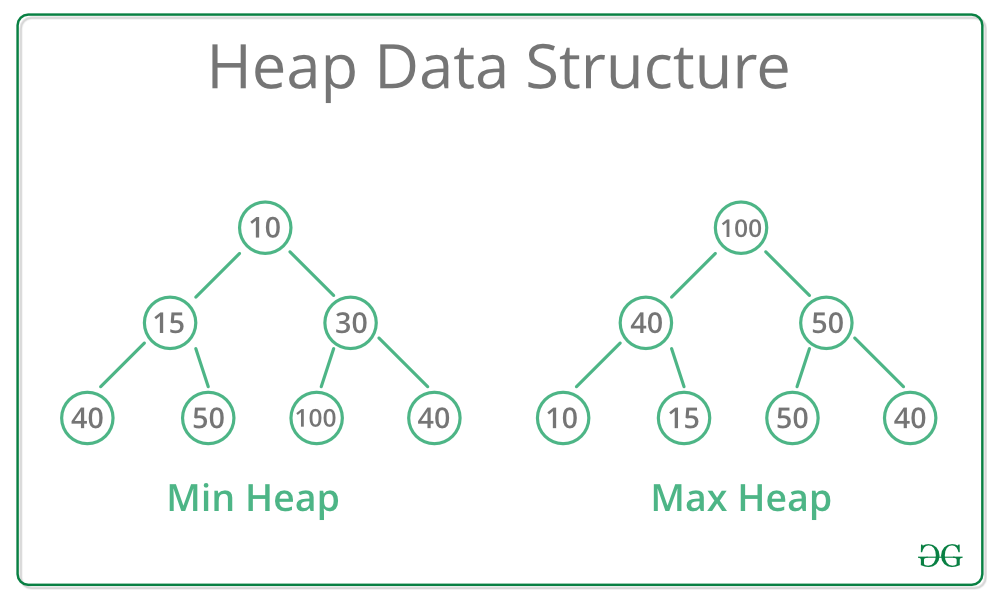

- A heap is a tree-based data structure where the tree is a complete binary tree

- Two kinds of heaps, min-heaps and max-heaps

- For a min-heap, the heap order specifies that for every internal node other than the root,

- In other words, the root of the tree/subtree must be the smallest node

- This property is inverted for max heaps

- Complete binary tree means that every level of the tree, except possibly the last, is filled, and all nodes are as far left as possible.

- More formally, for a heap of height , for there are nodes of depth

- At depth , the internal nodes are to the left of the external nodes

- The last node of a heap is the rightmost node of maximum depth

- Unlike binary search trees, heaps can contain duplicates

- Heaps are also unordered data structures

- Heaps can be used to implement priority queues

- An

Entry(Key,Value)is stored at each node

- An

Insertion

- To insert a node

zinto a heap, you insert the node after the last node, makingzthe new last node- The last node of a heap is the rightmost node of max depth

- The heap property is then restored using the upheap algorithm

- The just inserted node is filtered up the heap to restore the ordering

- Moving up the branches starting from the

z- While

parent(z) > (z)- Swap

zandparent(z)

- Swap

- While

- Since a heap has height , this runs in time

Removal

- To remove a node

zfrom the heap, replace the root node with the last nodew - Remove the last node

w - Restore the heap order using downheap

- Filter the replacement node back down the tree

- While

wis greater than either of its children- Swap

wwith the smallest of its children

- Swap

- While

- Also runs in time

Heap Sort

For a sequence S of n elements with a total order relation on them, they can be ordered using a heap.

- Insert all the elements into the heap

- Remove them all from the heap again, they should come out in order

- calls of insert take time

- calls to remove take time

- Overall runtime is

- Much faster than quadratic sorting algorithms such as insertion and selection sort

Array-based Implementation

For a heap with n elements, the element at position p is stored at cell f(p) such that

- If

pis the root,f(p) = 0 - If

pis the left childq,f(p) = 2*f(q)+1 - If

pis the right childq,f(p) = 2*f(q)+2

Insert corresponds to inserting at the first free cell, and remove corresponds to removing from cell 0

- A heap with

nkeys has length

Skip Lists

- When implementing sets, the idea is to be able to test for membership and update elements efficiently

- A sorted array or list is easy to search, but difficult to maintain in order

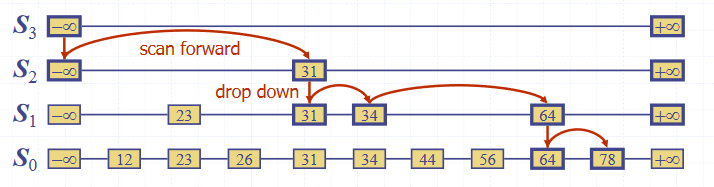

- Skip lists consists of multiple lists/sets

- The skip list

- contains all the elements, plus

- is a random subset of , for

- Each element of appears in with probability 0.5

- contains only

Search

To search for an element in the list:

- Start in the first position of the top list

- At the current position , compare with the next element in the current list

- If , return

- If , move to the next element in the list

- "Scan forward"

- If , drop down to the element below

- "Drop down"

- If the end of the list () is reached, the element does not exist

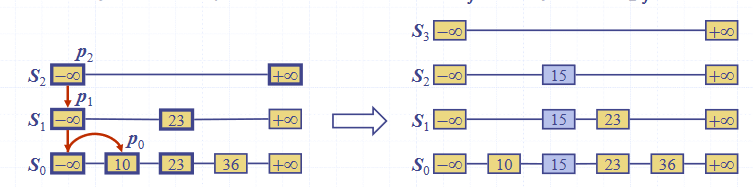

Insertion

To insert an element into the list:

- Repeatedly toss a fair coin until tails comes up

- is the number of times the coin came up heads

- If , add to the skip list new lists

- Each containing only the two end keys

- Search for and find the positions of the items with the largest element in each list

- Same as the search algorithm

- For , insert k into list after position

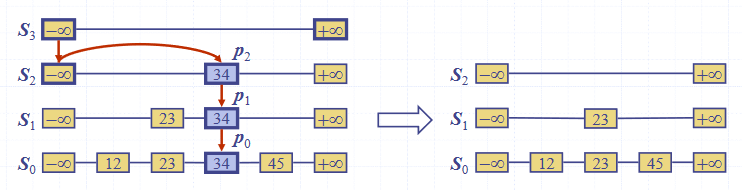

Deletion

To remove an entry from a skip list:

- Search for in the skip list and find the positions of the items containing

- Remove those positions from the lists

- Remove a list if neccessary

Implementation

A skip list can be implemented using quad-nodes, where each node stores

- It's item/element

- A pointer to the node above

- A pointer to the node below

- A pointer to the next node

- A pointer to the previous node

Performance

- The space used by a skip list depends on the random number on each invocation of the insertion algorithm

- On average, the expected space usage of a skip list with items is

- The run time of the insertion is affected by the height of the skip list

- A skip list with items has average height

- The search time in a skip list is proportional to the number of steps taken

- The drop-down steps are bounded by the height of the list

- The scan-forward steps are bounded by the length of the list

- Both are

- Insertion and deletion are also both

Graphs

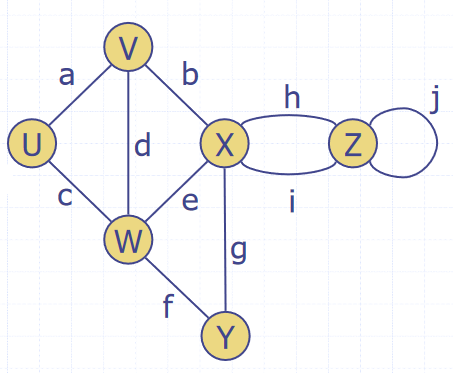

A graph is a collection of edges and vertices, a pair , where

- is a set of nodes, called vertices

- is a collection of pairs of vertices, called edges

- Vertices and edges are positions and store elements

Examples of graphs include routes between locations, users of a social network and their friendships, and the internet.

There are a number of different types of edge in a graph, depending upon what the edge represents:

- Directed edge

- Ordered pair of vertices

- First vertex is the origin

- Second vertex is the destination

- For example, a journey between two points

- Undirected edge

- Unordered pair of vertices

- In a directed graph, all edges are directed

- In an undirected graph, all edged are undirected

Graph Terminology

- Adjacent vertices

- Two vertices and are adjacent (ie connected by an edge)

- Edges incident on a vertex

- The edges connect to a vertex

- , , and are incident on

- End vertices or endpoints of an edge

- The vertices connected to an edge

- and are endpoints of

- The degree of a vertex

- The number of edges connected to it

- has degree 5

- Parallel edges

- Edges that make the same connection

- and are parallel

- Self-loop

- An edge that has the same vertex at both ends

- is a self-loop

- Path

- A sequence of alternating vertices and edges

- Begins and ends with a vertex

- Each edge is preceded and followed by its endpoints

- is a simple path

- Cycle

- A circular sequence of alternating vertices and edges

- A circular path

- A simple cycle is one where all edges and vertices are distinct

- A non-simple cycle contains an edge or vertex more than once

- A graph without cycles (acyclic) is a tree

- A circular sequence of alternating vertices and edges

- Length

- The number of edges in a path

- The number of edges in a cycle

Graph Properties

Notation:

- is the number of vertices

- is the number of edges

- is the degree of vertex

The sum of the degrees of the vertices of a graph is always an even number. Each edge is counted twice, as it connects to two vertices, so . For example, the graph shown has and .

In an undirected graph with no self loops and no multiple edges, . Each vertex has degree at most and . For the graph shown,

The Graph ADT

A graph is a collection of vertices and edges, which are modelled as a combination of 3 data types: Vertex, Edge and Graph.

- A

Vertexis just a box object storing an element provided by the user - An

Edgealso stores an associated value which can be retrieved

public interface Graph{

int numVertices();

Collection vertices(); //returns all the graph's vertices

int numEdges();

Collection<Edge> edges(); //returns all the graph's edges

Edge getEdge(u,v); //returns the edge between u and v, if on exists

// for an undirected graph getEdge(u,v) == getEdge(v,u)

Pair<Vertex, Vertex> endVertices(e); //returns the endpoint vertices of edge e

Vertex oppsite(v,e); //returns the vertex adjacent to v along edge e

int outDegree(v); //returns the number of edges going out of v

int inDegree(v); //returns the number of edges coming into v

//for an undirected graph, inDegree(v) == outDegree(v)

Collection<Vertex> outgoingEdges(v); //returns all edges that point out of vertex v

Collection<Vertex> incomingEdges(v); //returns all edges that point into vertex v

//for an undirected graph, incomingEdges(v) == outgoingEdges(v)

Vertex insertVertex(x); //creates and returns a new vertex storing element x

Edge insertEdge(u,v,x); //creates and returns a new edge from vertices u to v, storing element x in the edge

void removeVertex(v); //removes vertex v and all incident edges from the graph

void removeEdge(e); //removes edge e from the graph

}

Representations

There are many different ways to represent a graph in memory.

Edge List

An edge list is just a list of edges, where each edge knows which two vertices it points to.

- The

Edgeobject stores- It's element

- It's origin

Vertex - It's destination

Vertex

- The edge list stores a sequence of

Edgeobjects

Adjacency List

In an adjacency list, each vertex stores an array of the vertices adjacent to it.

- The

Vertexobject stores- It's element

- A collection/array of all it's incident edges

- The adjacency list stores all

VertexObjects

Adjacency Matrix

An adjacency matrix is an matrix, where is the number of vertices in the graph. It acts as a lookup table, where each cell corresponds to an edge between two vertices.

- If there is an edge between two vertices and , the matrix cell will contain the edge.

- Undirected graphs are symmetrical along the leading diagonal

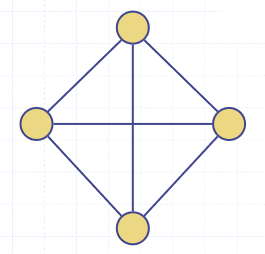

Subgraphs

- A subgraph of a graph is a graph such that:

- The vertices of are a subset of the vertices of

- The edges of are a subset of the edges of

- A spanning subgraph of is a subgraph that contains all the vertices of

- A graph is connected if there is a path between every pair of vertices

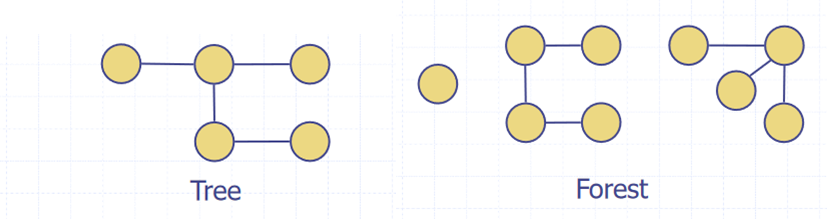

- A tree is an undirected graph such that

- is connected

- has no cycles

- A forest is an undirected graph without cycles

- The connected components of a forest are trees

- A spanning tree of a connected graph is a spanning subgraph that has all vertices covered with a minimum possible number of edges

- A spanning tree is not unique unless the graph is a tree

- Multiple spanning trees exist

- Spanning trees have applications in the design of communication networks

- A spanning forest of a graph is a spanning subgraph that is a forest

- A spanning tree is not unique unless the graph is a tree

Depth First Search

DFS is a general technique for traversing a graph. A DFS traversal of a graph will:

- Visit all vertices and edges of

- Determine whether is connected

- Compute the spanning components of

- Compute the spanning forest of

DFS on a graph with vertices and edges takes time. The algorithm is:

- For a graph and a vertex of

- Mark vertex as visited

- For each of 's outgoing edges

- If has not been visited then

- Record as the discovery edge for vertex

- Recursively call DFS with on

- If has not been visited then

DFS(G,V) visits all vertices and edges in the connected component of v, and the discovery edges labelled by DFS(G,V) form a spanning tree of the connected component of v.

DFS can also be extended to path finding, to find a path between two given vertices and . A stack is used to keep track of the path, and the final state of the stack is the path between the two vertices. As soon as the destination vertex is encountered, the contents of the stack is returned.

DFS can be used for cycle detection too. A stack is used to keep track of the path between the start vertex and the current vertex. As soon as a back edge (an edge we have already been down in the opposite direction) is encountered, we return the cycle as the portion of the stack from the top to the vertex .

To perform DFS on every connected component of a graph, we can loop over every vertex, doing a new DFS from each unvisited one. This will detect all vertices in graphs with multiple connected components.

Breadth First Search

BFS is another algorithm for graph traversal, similar to DFS. It also requires time. The difference between the two is that BFS uses a stack while DFS uses a queue. The algorithm is as follows:

- Mark all vertices and edges as unexplored

- Create a new queue

- Add the starting vertex to the queue

- Mark as visited

- While the queue is not empty

- Pop a vertex from the queue

- For all neighbouts of

- If is not visited

- Push into the queue

- Mark as visited

- If is not visited

For a connected component of graph containing :

- BFS visits all vertices and edges of

- The discovery edges labelled by

BFS(G,s)form a spanning tree of - The path of the spanning tree formed by the BFS is the shortest path between the two vertices

BFS can be specialised to solve the following problems in time:

- Compute the connected components of a graph

- Compute a spanning forest of a graph

- Find a simple cycle in G

- Find the shortest path between two vertices

- DFS cannot do this, this property is unique to BFS

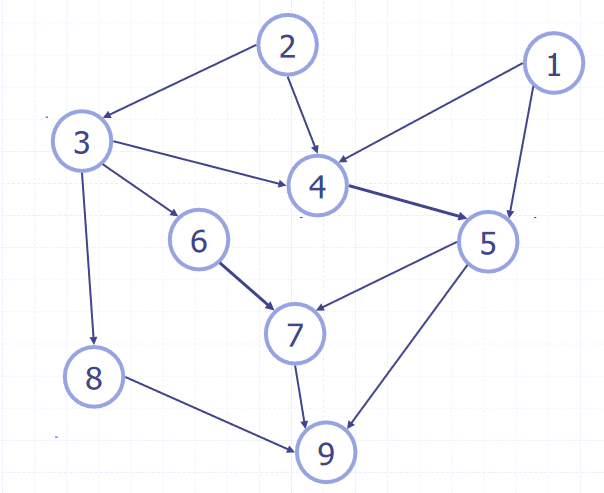

Directed Graphs

A digraph (short for directed graph) is a graph whose edges are all directed.

- Each edge goes in only one direction

- Edge goes from a to b but not from b to a

- If the graph is simple and has vertices and edges,

- DFS and BFS can be specialised to traversing directed edges

- A directed DFS starting at a vertex determines the vertices reachable from

- One vertex is reachable from another if there is a directed path to it

Strong Connectivity

A digraph is said to be strongly connected if each vertex can reach all other vertices. This property can be identified in time with the following algorithm:

- Pick a vertex in the graph

- Perform a DFS starting from

- If theres a vertex not visited, return false

- Let be with all the edge directions reversed

- Perform a DFS starting from in

- If theres a vertex not visited, return false

- Else, return True

Transitive Closure

Given a digraph , the transitive closure of is the digraph such that:

- has the same vertices as

- If has a directed path from to , then G* also has a directed *edge* from to

- In , every pair of vertices with a path between them in is now adjacent

- The transitive closure provides reachability information about a digraph

The transitive closure can be computed by doing a DFS starting at each vertex. However, this takes time. Alternatively, there is the Floyd-Warshall algorithm:

- For the graph , number the vertices

- Compute the graphs

- has directed edge if has a directed path from to with intermediate vertices

- Digraph is computed from

- Add if edges and appear in

In pseudocode:

for k=1 to n

Gk = Gk_1

for i=1 to n (i != k)

for j=1 to n (j != i, j!=k)

if G_(k-1).areAdjacent(vi,vk) && G_(k-1).areAdjacent(vk,vj)

if !G_(k-1).areAdjacent(vi,vj)

G_k.insertDirectedEdge(vi,vj,k)

return G_n

This algorithm takes time. Basically, at each iteration a new vertex is introduced, and each vertex is checked to see if a path exists through the newly added vertex. If it does, a directed edge is inserted to transitively close the graph.

Topological Ordering

- A Directed Acyclic Graph (DAG) is digraph that has no directed cycles

- A topological ordering of a digraph is a numbering of the vertices such that for every edge ,

- The vertex it points to is always greater than it

- A digraph can have a topological ordering if and only if it is a DAG

A topological ordering can be calculated using a DFS:

public static void topDFS(Graph G, Vertex v){

v.visited = true

for(Edge e: v.edges){

w = opposite(v,e)

if(w.visited = false)

topDFS(G,w)

else{

v.label = n

n = n-1

}

}

}

The first node encountered in the DFS is assigned , the one after that , and so on until all nodes are labelled.

CS132

Note that specifics details of architectures such as the 68k, its specific instruction sets, or the PATP are not examinable. They are included just to serve as examples.

The 68008 datasheet can be found here, as a useful resource.

Digital Logic

Digital logic is about reasoning with systems with two states: on and off (0 and 1 (binary)).

Basic Logic Functions

Some basic logic functions, along with their truth tables.

NOT

| A | f |

|---|---|

| 0 | 1 |

| 1 | 0 |

AND

| A | B | f |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

OR

| A | B | f |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 1 |

XOR

| A | B | f |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

NAND

| A | B | f |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

NOR

| A | B | f |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 0 |

X-NOR

| A | B | f |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

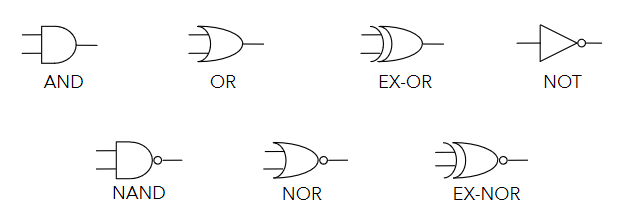

Logic Gates

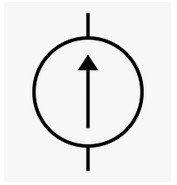

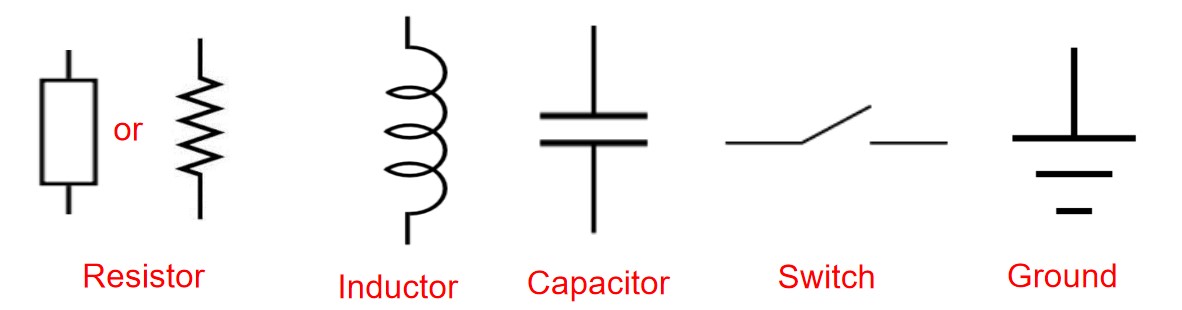

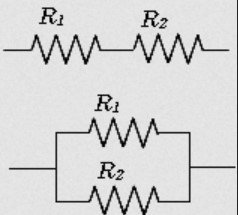

Logic gates represent logic functions in a circuit. Each logic gate below represents one of the functions shown above.

Logic Circuits

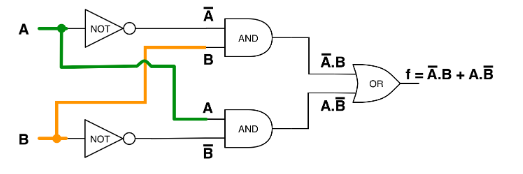

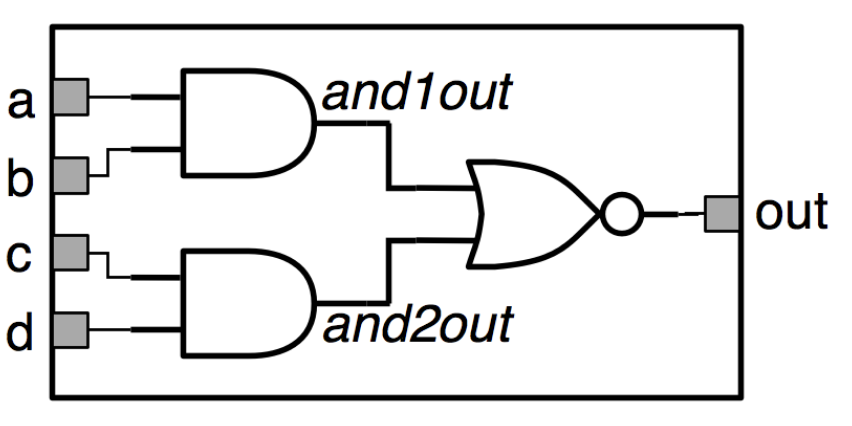

Logic circuits can be built from logic gates, where outputs are logical functions of their inputs. Simple functions can be used to build up more complex ones. For example, the circuit below implements the XOR function.

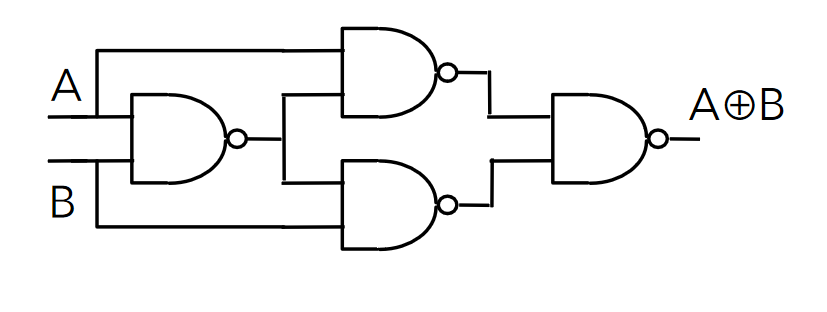

Another example, using only NAND gates to build XOR. NAND (or NOR) gates can be used to construct any logic function.

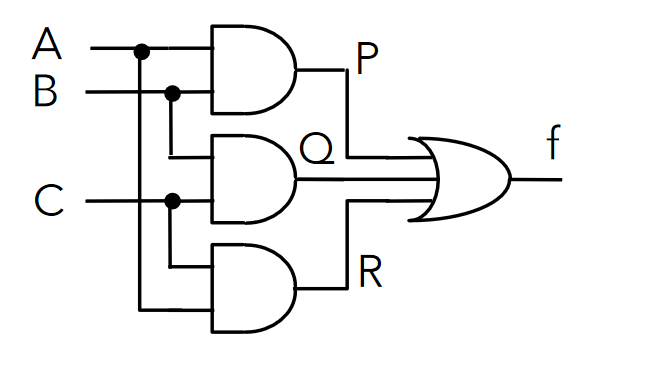

Truth tables can be constructed for logic circuits by considering intermediate signals. The circuit below has 3 inputs and considers 3 intermediate signals to construct a truth table.

| A | B | C | P | Q | R | f |

|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| 0 | 1 | 1 | 0 | 1 | 0 | 1 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 1 | 0 | 0 | 1 | 1 |

| 1 | 1 | 0 | 1 | 0 | 0 | 1 |

| 1 | 1 | 1 | 1 | 1 | 1 | 1 |

Truth tables of circuits are important as they enumerate all possible outputs, and help to reason about logic circuits and functions.

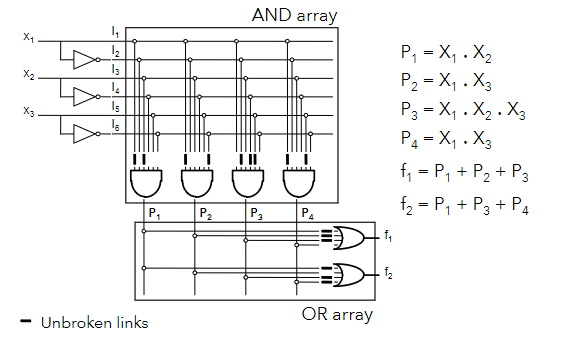

Boolean Algebra

- Logic expressions, like normal algebraic ones, can be simplified to reduce complexity

- This reduces the number of gates required for their implementation

- The less gates, the more efficient the circuit is

- More gates is also more expensive

- Sometimes, only specific gates are available too and equivalent expressions must be found that use only the available gates

- Two main ways to simplify expressions

- Boolean algebra

- Karnaugh maps

- The truth table for the expression before and after simplifying must be identical, or you've made a mistake

Expressions from Truth Tables

A sum of products form of a function can be obtained from it's truth table directly.

| A | B | C | f |

|---|---|---|---|

| 0 | 0 | 0 | 1 |

| 0 | 0 | 1 | 1 |

| 0 | 1 | 0 | 0 |

| 0 | 1 | 1 | 0 |

| 1 | 0 | 0 | 1 |

| 1 | 0 | 1 | 0 |

| 1 | 1 | 0 | 1 |

| 1 | 1 | 1 | 1 |

Taking only the rows that have an output of 1:

- The first row of the table:

- The second row:

- Fifth:

- Seventh:

- Eight:

Summing the products yields:

Boolean Algebra Laws

There are several laws of boolean algebra which can be used to simplify logic expressions:

| Name | AND form | OR form |

|---|---|---|

| Identity Law | ||

| Null Law | ||

| Idempotent Law | ||

| Inverse Law | ||

| Commutative Law | ||

| Associative Law | ||

| Distributive Law | ||

| Absorption Law | ||

| De Morgan's Law |

- Can go from AND to OR form (and vice versa) by swapping AND for OR, and 0 for 1

Most are fairly intuitive, but some less so. The important ones to remember are:

De Morgan's Laws

De Morgan's Laws are very important and useful ones, as they allow to easily go from AND to OR. In simple terms:

- Break the negation bar

- Swap the operator

Example 1

When doing questions, all working steps should be annotated.

Example 2

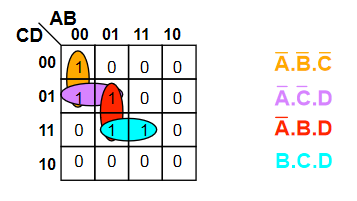

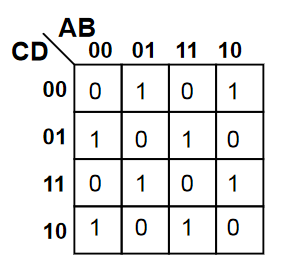

Karnaugh Maps

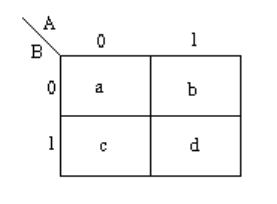

- Karnaugh Maps (k-maps) are sort of like a 2D- truth table

- Expressions can be seen from the location of 1s in the map

| A | B | f |

|---|---|---|

| 0 | 0 | a |

| 0 | 1 | b |

| 1 | 0 | d |

| 1 | 1 | c |

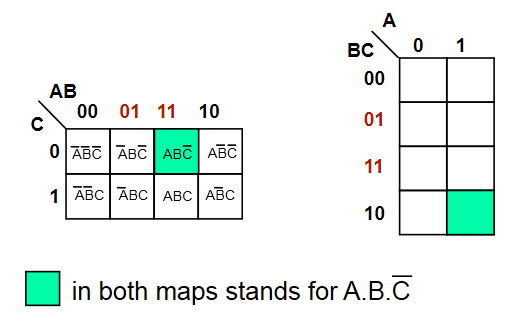

- Functions of 3 variables can used a 4x2 or 2x4 map (4 variables use a 4x4 map)

- Adjacent squares in a k-map differ by exactly 1 variable

- This makes the map gray coded

- Adjacency also wraps around

The function is shown in the map below.

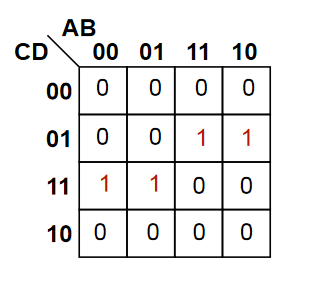

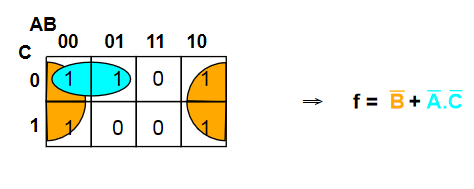

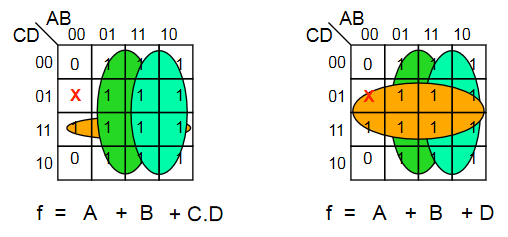

Grouping

- Karnaugh maps contain groups, which are rectangular clusters of 1s -

- To simplify a logic expression from a k-map, identify groups from it, making them as large and as few as possible

- The number of elements in the group must be a power of 2

- Each group can be described by a singular expression

- The variables in the group are the ones that are constant within the group (ie, define that group)

Sometimes, groups overlap which allow for more than one expression

The function for the map is therefore either or (both are equivalent)

Sometimes it is not possible to minimise an expression. the map below shows an XOR function

Don't Care Conditions

Sometimes, a certain combination of inputs can't happen, or we dont care about the output if it does. An X is used to denote these conditions, which can be assumed as either 1 or 0, whichever is more convenient.

Combinatorial Logic Circuits

Some useful circuits can be constructed using logic gates, examples of which are shown below. Combinatorial logic circuits operate as fast as the gates operate, which is theoretically zero time (realistically, there is a nanosecond-level tiny propagation delay).

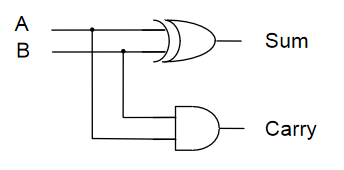

1-Bit Half Adder

- Performs the addition of 2 bits, outputting the result and a carry bit.

| A | B | Sum | Carry |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 0 | 1 | 1 | 0 |

| 1 | 0 | 1 | 0 |

| 1 | 1 | 0 | 1 |

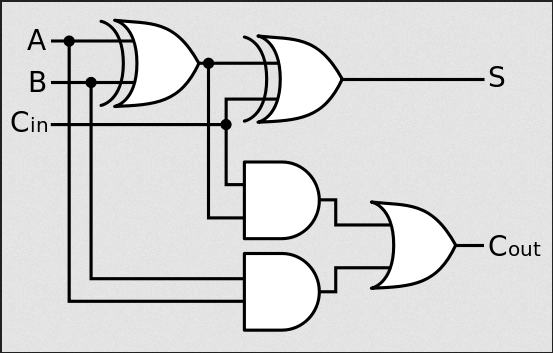

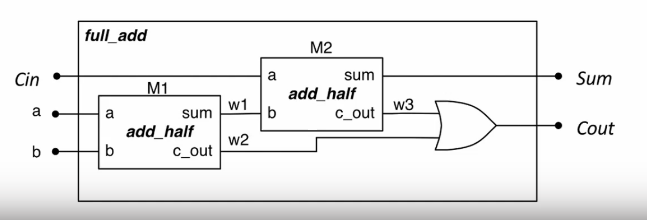

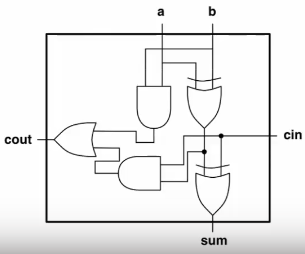

1-Bit Full Adder

- Adds 2 bits plus carry bit, outputting the result and a carry bit.

| Carry in | A | B | Sum | Carry out |

|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 1 | 0 | 1 |

| 0 | 1 | 0 | 0 | 1 |

| 0 | 1 | 1 | 1 | 0 |

| 1 | 0 | 0 | 0 | 1 |

| 1 | 0 | 1 | 1 | 0 |

| 1 | 1 | 0 | 1 | 0 |

| 1 | 1 | 1 | 1 | 1 |

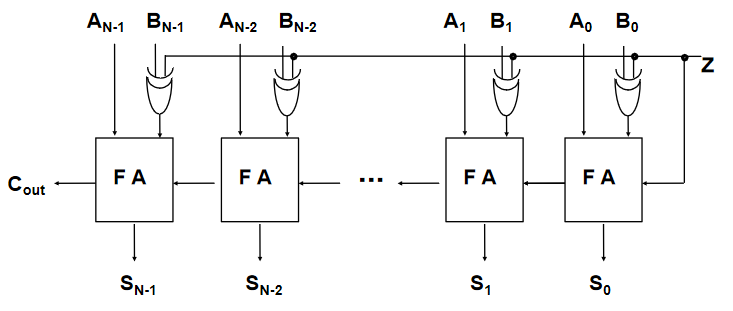

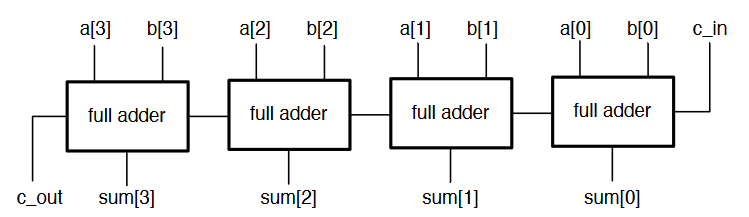

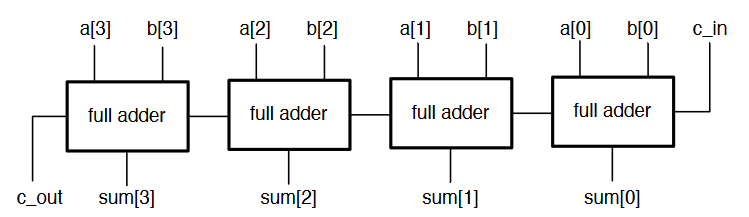

N-Bit Full Adder

- Combination of a number of full adders

- The carry out from the previous adder feeds into the carry in of the next

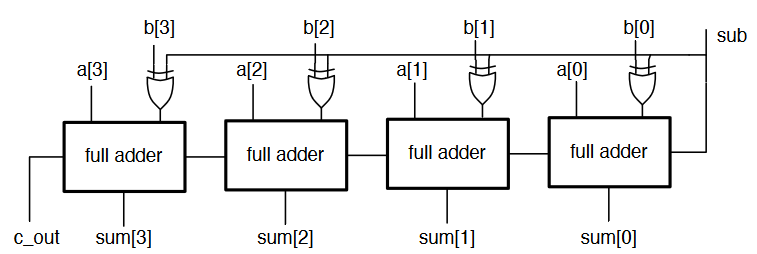

N-Bit Adder/Subtractor

- To convert an adder to an adder/subtractor, we need a control input such that:

- is calculated using two's complement

- Invert the N bit binary number B by doing

- Add 1 (make the starting carry in a 1)

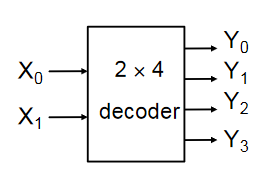

Encoders & Decoders

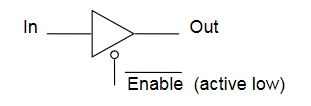

- A decoder has binary input pins, and one output pin per possible input state

- eg 2 inputs has 4 unique states so has 4 outputs

- 3 inputs has 8 outputs

- Often used for addressing memory

- The decoder shown below is active low

- Active low means that 0 = active, and 1 = inactive

- Converse to what would usually be expected

- Active low pins sometimes labelled with a bar, ie

- Active low means that 0 = active, and 1 = inactive

- It is important to be aware of this, as ins and outs must comform to the same standard

| 0 | 0 | 0 | 1 | 1 | 1 |

| 0 | 1 | 1 | 0 | 1 | 1 |

| 1 | 0 | 1 | 1 | 0 | 1 |

| 1 | 1 | 1 | 1 | 1 | 0 |

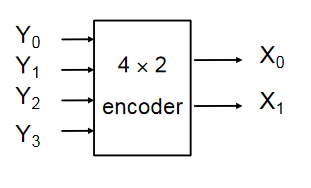

- Encoders are the opposite of decoders, encoding a set of inputs into outputs

- Multiple input pins, only one should be active at a time

- Active low encoder shown below

| 0 | 1 | 1 | 1 | 0 | 0 |

| 1 | 0 | 1 | 1 | 0 | 1 |

| 1 | 1 | 0 | 1 | 1 | 0 |

| 1 | 1 | 1 | 0 | 1 | 1 |

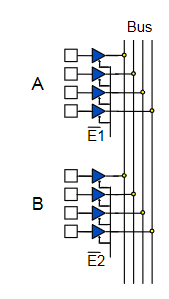

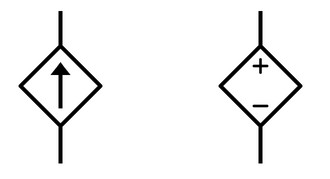

Multiplexers & De-Multiplexers

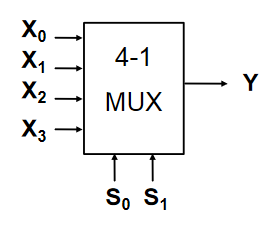

Multiplexers have multiple inputs, and then selector inputs which choose which of the inputs to put on the output.

| Y | ||

|---|---|---|

| 0 | 0 | |

| 0 | 1 | |

| 1 | 0 | |

| 1 | 1 |

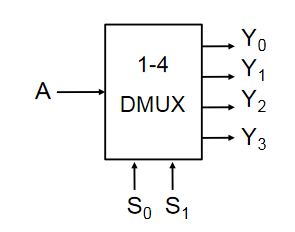

De-Multiplexers are the reverse of multiplexers, taking one input and selector inputs choosing which output it appears on. The one shown below is active low

| 0 | 0 | A | 1 | 1 | 1 |

| 0 | 1 | 1 | A | 1 | 1 |

| 1 | 0 | 1 | 1 | A | 1 |

| 1 | 1 | 1 | 1 | 1 | A |

Multiplexers and De-Multiplexers are useful in many applications:

- Source selection control

- Share one communication line between multiple senders/receivers

- Parallel to serial conversion

- Parallel input on X, clock signal on S, serial output on Y

Sequential Logic Circuits

A logic circuit whose outputs are logical functions of its inputs and it's current state

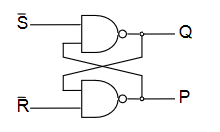

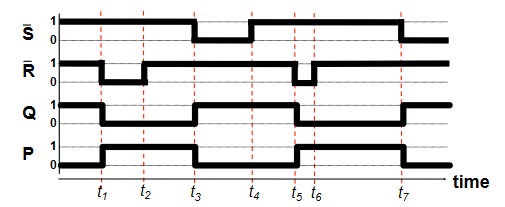

Flip-Flops

Flip-flops are the basic elements of sequential logic circuits. They consist of two nand gates whose outputs are fed back to the inputs to create a bi-stable circuit, meaning it's output is only stable in two states.

- and are active low set and reset inputs

- is set high when and

- is reset (to zero) when and

- If then does not change

- If both and are zero, this is a hazard condition and the output is invalid

| Q | P | ||

|---|---|---|---|

| 0 | 0 | X | X |

| 0 | 1 | 1 | 0 |

| 1 | 0 | 0 | 1 |

| 1 | 1 | X | X |

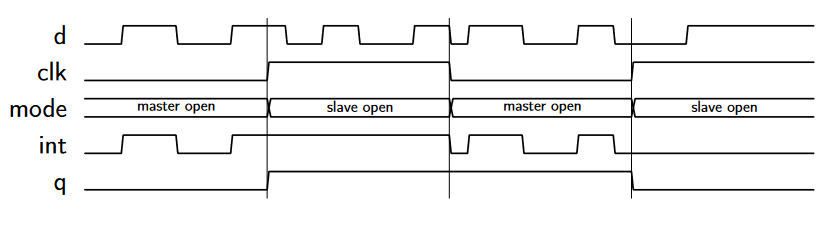

The timing diagram shows the operation of the flip flop

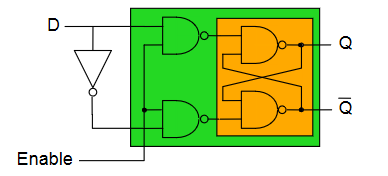

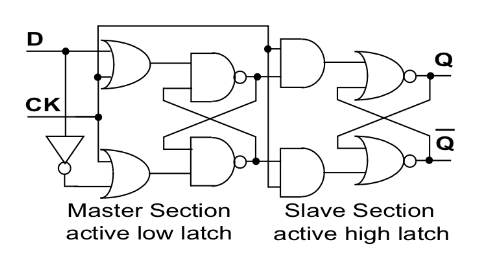

D-Type Latch

A D-type latch is a modified flip-flop circuit that is essentially a 1-bit memory cell.

- Output can only change when the enable line is high

- when enabled, otherwise does not change ()

- When enabled, data on goes to

| Enable | |||

|---|---|---|---|

| 0 | 0 | ||

| 0 | 1 | ||

| 1 | 0 | 0 | 1 |

| 1 | 1 | 1 | 0 |

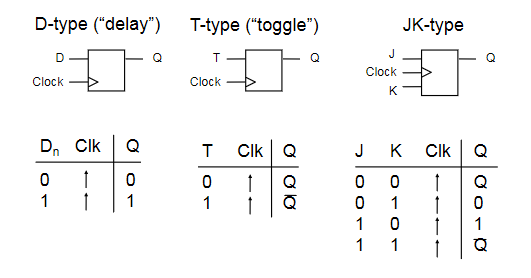

Clocked Flip-Flop

There are other types of clocked flip-flop whose output only changes on the rising edge of the clock input.

- means rising edge responding

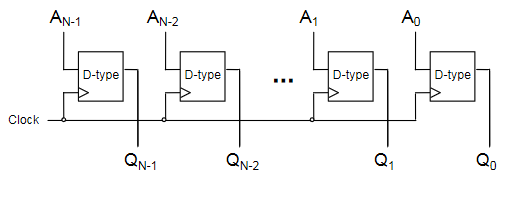

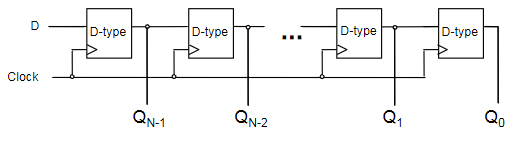

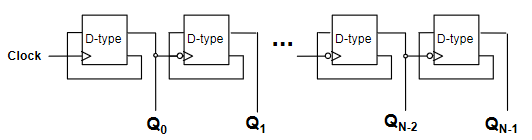

N-bit Register